
Regression analysis is used to model the relationship between a response variable and one or more predictor variables. STATGRAPHICS Centurion provides a large number of procedures for fitting different types of regression models:

1. Simple Regression - fits linear and nonlinear models with one predictor. Includes both least squares and resistant methods.
2. Box-Cox Transformations - fits a linear model with one predictor, where the Y variable is transformed to achieve approximate normality.
3. Polynomial Regression - fits a polynomial model with one predictor.
4. Calibration Models - fits a linear model with one predictor and then solves for X given Y.
5. Multiple Regression - fits linear models with two or more predictors. Includes an option for forward or backward stepwise regression and a Box-Cox or Cochrane-Orcutt transformation.
6. Comparison of Regression Lines - fits regression lines for one predictor at each level of a second predictor. Tests for significant differences between the intercepts and slopes.
7. Regression Model Selection - fits all possible regression models for multiple predictor variables and ranks the models by the adjusted R-squared or Mallows' Cp statistic.
8. Ridge Regression - fits a multiple regression model using a method designed to handle correlated predictor variables.
9. Nonlinear Regression - fits a user-specified model involving one or more predictors.
10. Partial Least Squares - fits a multiple regression model using a method that allows more predictors than observations.
11. General Linear Models - fits linear models involving both quantitative and categorical predictors.
12. Life Data Regression - fits regression models for response variables that represent failure times. Allows for censoring and non-normal error distributions.
13. Regression Analysis for Proportions - fits logistic and probit models for binary response data.
14. Regression Analysis for Counts - fits Poisson and negative binomial regression models.

Simple Regression

The simplest regression models involve a single response variable Y and a single predictor variable X. STATGRAPHICS will fit a variety of functional forms, listing the models in decreasing order of R-squared. If outliers are suspected, resistant methods can be used to fit the models instead of least squares.

Comparison of Alternative Models

| Model | R-Squared |
|---|---|
| Squared-Y reciprocal-X | 87.75% |
| Reciprocal-X | 87.11% |
| Square root-Y reciprocal-X | 86.71% |
| S-curve model | 86.27% |
| Double reciprocal | 85.25% |
| Reciprocal-Y logarithmic-X | 84.99% |
| Multiplicative | 84.98% |
| Logarithmic-X | 84.77% |
| Squared-Y logarithmic-X | 84.36% |
| Reciprocal-Y square root-X | 81.69% |
| Logarithmic-Y square root-X | 81.21% |
| Square root-X | 80.54% |
| Squared-Y square root-X | 79.68% |
| Reciprocal-Y | 76.73% |
| Exponential | 75.87% |
| Square root-Y | 75.37% |
| Logistic | 75.08% |
| Log probit | 75.03% |
| Linear | 74.83% |
| Squared-Y | 73.63% |
| Reciprocal-Y squared-X | 64.37% |
| Logarithmic-Y squared-X | 63.05% |
| Square root-Y squared-X | 62.34% |
| Squared-X | 61.60% |
| Double squared | 60.04% |
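
The ranking idea can be illustrated outside STATGRAPHICS with a short Python sketch. It is not the program's implementation: the data are made up, only six of the functional forms are included, and R-squared is computed on the transformed scale, which is an assumption about how such a table might be built. Each form is fitted as a straight line after transforming Y and/or X, and the results are sorted by R-squared.

```python
import math

def fit_line(u, v):
    """Ordinary least squares fit of v = a + b*u; returns (a, b, r_squared)."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    suu = sum((ui - mu) ** 2 for ui in u)
    suv = sum((ui - mu) * (vi - mv) for ui, vi in zip(u, v))
    svv = sum((vi - mv) ** 2 for vi in v)
    b = suv / suu
    a = mv - b * mu
    return a, b, suv ** 2 / (suu * svv)

# Candidate forms: (name, transform applied to Y, transform applied to X).
MODELS = [
    ("Linear",         lambda y: y, lambda x: x),
    ("Logarithmic-X",  lambda y: y, math.log),
    ("Reciprocal-X",   lambda y: y, lambda x: 1.0 / x),
    ("Square root-X",  lambda y: y, math.sqrt),
    ("Exponential",    math.log,    lambda x: x),
    ("Multiplicative", math.log,    math.log),
]

def rank_models(x, y):
    """Fit every candidate form and sort by R-squared, best first."""
    results = []
    for name, fy, fx in MODELS:
        u = [fx(xi) for xi in x]
        v = [fy(yi) for yi in y]
        results.append((name, fit_line(u, v)[2]))
    return sorted(results, key=lambda item: item[1], reverse=True)

# Made-up data that roughly follow y = 10 - 8/x (X and Y must be positive here).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 8.0, 10.0]
y = [2.1, 5.9, 7.4, 8.0, 8.3, 8.7, 9.0, 9.2]

for name, r2 in rank_models(x, y):
    print(f"{name:<16}{100 * r2:6.2f}%")
```

Because models with a transformed Y are scored on different scales, their R-squared values are only loosely comparable; a sketch like this is best used for screening candidate forms.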

Box-Cox Transformations

When the response variable does not follow a normal distribution, it is sometimes possible to use the methods of Box and Cox to find a transformation that improves the fit. Their transformations are based on powers of Y. STATGRAPHICS will automatically determine the optimal power and fit an appropriate model.
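
As a rough illustration of how an optimal power can be found, the sketch below searches a grid of powers and keeps the one that gives the smallest residual sum of squares after a geometric-mean-scaled Box-Cox transformation of Y. This is the textbook approach, not necessarily what STATGRAPHICS does internally; the function names, grid, and data are assumptions made for the example, and Y must be strictly positive.

```python
import math

def boxcox_scaled(y, lam):
    """Box-Cox power transform, scaled by the geometric mean of y so that
    residual sums of squares are comparable across values of lam."""
    gm = math.exp(sum(math.log(v) for v in y) / len(y))
    if lam == 0:
        return [gm * math.log(v) for v in y]
    return [(v ** lam - 1.0) / (lam * gm ** (lam - 1.0)) for v in y]

def residual_ss(x, z):
    """Residual sum of squares from the least squares line z = a + b*x."""
    n = len(x)
    mx, mz = sum(x) / n, sum(z) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxz = sum((xi - mx) * (zi - mz) for xi, zi in zip(x, z))
    szz = sum((zi - mz) ** 2 for zi in z)
    return szz - sxz ** 2 / sxx

def best_power(x, y, grid):
    """Return the power in grid that minimizes the scaled residual SS."""
    return min(grid, key=lambda lam: residual_ss(x, boxcox_scaled(y, lam)))

# Noise-free exponential data, so the log transform (power 0) is the right answer.
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [math.exp(1.0 + 0.5 * xi) for xi in x]
grid = [k / 4 for k in range(-8, 9)]   # powers from -2 to 2 in steps of 0.25

lam = best_power(x, y, grid)
print("selected power:", lam)
```

A power of 1 leaves Y essentially unchanged, 0.5 corresponds to a square root, 0 to a logarithm, and -1 to a reciprocal.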

Polynomial Regression

Another approach to fitting a nonlinear equation is to consider polynomial functions of X. For interpolative purposes, polynomials have the attractive property of being able to approximate many kinds of functions.
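
A polynomial is still linear in its coefficients, so it can be fitted by ordinary least squares. The sketch below solves the normal equations with a small Gaussian elimination routine; the helper names (`solve`, `polyfit`, `polyval`) and the data are illustrative assumptions, and for high degrees a numerically more stable method would be preferable.

```python
def solve(a, b):
    """Solve the square linear system a * coef = b by Gaussian elimination
    with partial pivoting. The inputs are copied, not modified."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coef = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * coef[c] for c in range(r + 1, n))
        coef[r] = (m[r][n] - tail) / m[r][r]
    return coef

def polyfit(x, y, degree):
    """Least squares polynomial coefficients [b0, b1, ..., b_degree]."""
    k = degree + 1
    # Normal equations (X'X) b = X'y, where column j of X holds x**j.
    xtx = [[sum(xi ** (i + j) for xi in x) for j in range(k)] for i in range(k)]
    xty = [sum(yi * xi ** i for xi, yi in zip(x, y)) for i in range(k)]
    return solve(xtx, xty)

def polyval(coef, x0):
    """Evaluate the fitted polynomial at x0."""
    return sum(b * x0 ** j for j, b in enumerate(coef))

# Exact quadratic y = 1 + 2x - 0.5x^2, so the fit should recover the coefficients.
x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.0 + 2.0 * xi - 0.5 * xi ** 2 for xi in x]

coef = polyfit(x, y, 2)
print([round(b, 6) for b in coef])
print(round(polyval(coef, 2.5), 6))   # interpolated value between data points
```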

Calibration Models

In a typical calibration problem, a number of known samples are measured and an equation is fit relating the measurements to the reference values. The fitted equation is then used to predict the value of an unknown sample by generating an inverse prediction (predicting X from Y) after measuring the sample.

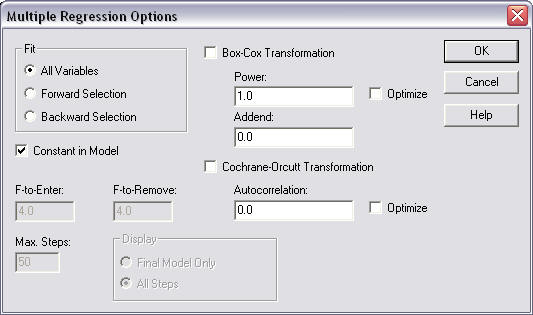
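
The inverse prediction step amounts to solving the fitted line for X. A minimal sketch, assuming a straight-line calibration and made-up readings (the function names are hypothetical, and no confidence limits for the inverse prediction are computed here):

```python
def fit_line(x, y):
    """Least squares fit of y = intercept + slope*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope

def inverse_predict(intercept, slope, y_new):
    """Solve y_new = intercept + slope*x for x."""
    return (y_new - intercept) / slope

known = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]      # reference values (X)
measured = [0.1, 2.0, 4.1, 6.0, 8.1, 10.0]   # instrument readings (Y), made up

a, b = fit_line(known, measured)
x_hat = inverse_predict(a, b, 5.0)           # new sample measured at Y = 5.0
print(round(x_hat, 3))
```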

Multiple Regression

The Multiple Regression procedure fits a model relating a response variable Y to multiple predictor variables X1, X2, ... . The user may include all predictor variables in the fit or ask the program to use a stepwise regression to select a subset containing only significant predictors. At the same time, the Box-Cox method can be used to deal with non-normality and the Cochrane-Orcutt procedure to deal with autocorrelated residuals.

Comparison of Regression Lines

In some situations, it is necessary to compare several regression lines. STATGRAPHICS will fit parallel or non-parallel linear regressions for each level of a "BY" variable and perform statistical tests to determine whether the intercepts and/or slopes of the lines are significantly different.
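
One common way to write such a comparison, shown here for a "BY" variable with only two levels coded as an indicator D (0 or 1), is a single model with an interaction term. The notation is an assumption made for illustration and is not taken from the STATGRAPHICS output:

```latex
Y = \beta_0 + \beta_1 X + \beta_2 D + \beta_3 (D \cdot X) + \varepsilon
```

For D = 0 the line has intercept \beta_0 and slope \beta_1; for D = 1 it has intercept \beta_0 + \beta_2 and slope \beta_1 + \beta_3. Testing \beta_2 = 0 compares the intercepts, and testing \beta_3 = 0 checks whether the lines are parallel.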

Regression Model Selection

If the number of predictors is not excessive, it is possible to fit regression models involving all combinations of 1 predictor, 2 predictors, 3 predictors, etc., and sort the models according to a goodness-of-fit statistic. In STATGRAPHICS, the Regression Model Selection procedure implements such a scheme, selecting the models which give the best values of the adjusted R-Squared or of Mallows' Cp statistic.
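
For reference, the usual textbook definitions of the two statistics are given below. The source does not state the exact formulas STATGRAPHICS uses, so treat the notation as an assumption: n is the number of observations, p the number of coefficients in the candidate model (including the intercept), SSE_p its residual sum of squares, SST the total sum of squares, and \hat{\sigma}^2 the mean squared error of the model containing all predictors.

```latex
R^2_{\mathrm{adj}} = 1 - \frac{SSE_p / (n - p)}{SST / (n - 1)},
\qquad
C_p = \frac{SSE_p}{\hat{\sigma}^2} - n + 2p
```

Larger adjusted R-squared values are better, while a good model typically has C_p close to p.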

Ridge Regression

When the predictor variables are highly correlated amongst themselves, the coefficients of the resulting least squares fit may be very imprecise. By allowing a small amount of bias in the estimates, more reasonable coefficients may often be obtained. Ridge regression is one method to address these issues. Often, small amounts of bias lead to dramatic reductions in the variance of the estimated model coefficients.

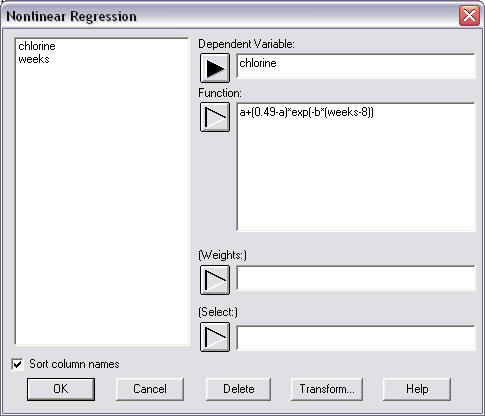
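
The effect of the ridge parameter can be seen in a small sketch with two nearly collinear predictors. It uses the standard ridge estimator in correlation form, solving (R + kI) b = r for the standardized coefficients, where R is the predictor correlation matrix and r holds the correlations with Y. The data, function names, and the values of k are made up for illustration.

```python
import math

def center_scale(v):
    """Center to mean 0 and scale to unit length, so that cross-products
    of transformed columns are correlations."""
    m = sum(v) / len(v)
    d = [vi - m for vi in v]
    norm = math.sqrt(sum(di * di for di in d))
    return [di / norm for di in d]

def dot(u, v):
    return sum(ui * vi for ui, vi in zip(u, v))

def ridge_two_predictors(x1, x2, y, k):
    """Standardized ridge coefficients (b1, b2) for ridge parameter k >= 0.
    k = 0 gives the ordinary least squares solution."""
    z1, z2, zy = center_scale(x1), center_scale(x2), center_scale(y)
    r12, r1y, r2y = dot(z1, z2), dot(z1, zy), dot(z2, zy)
    d = 1.0 + k                          # diagonal of R + k*I
    det = d * d - r12 * r12
    b1 = (d * r1y - r12 * r2y) / det     # Cramer's rule for the 2x2 system
    b2 = (d * r2y - r12 * r1y) / det
    return b1, b2

# Made-up data in which x2 is almost a copy of x1.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [1.1, 1.9, 3.2, 3.9, 5.1, 6.0, 6.8, 8.2]
y = [2.3, 3.9, 6.2, 7.8, 10.1, 12.2, 13.7, 16.1]

for k in (0.0, 0.01, 0.05, 0.1):
    b1, b2 = ridge_two_predictors(x1, x2, y, k)
    print(f"k={k:<5} b1={b1:8.4f} b2={b2:8.4f}")
```

As k grows, the coefficient vector shrinks toward zero; plotting the coefficients against k gives the familiar ridge trace used to choose a value of k.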

Nonlinear Regression

Most least squares regression programs are designed to fit models that are linear in the coefficients. When the analyst wishes to fit an intrinsically nonlinear model, a numerical procedure must be used. The STATGRAPHICS Nonlinear Least Squares procedure uses an algorithm due to Marquardt to fit any function entered by the user.
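
To give a feel for this kind of iteration, here is a basic Levenberg-Marquardt sketch for the two-parameter model y = a*exp(b*x). It is a simplified illustration under stated assumptions, not the STATGRAPHICS implementation: the model, starting values, damping schedule (divide or multiply by 10), and stopping rules are all choices made for the example.

```python
import math

def sse(x, y, a, b):
    """Sum of squared residuals for the model y = a*exp(b*x)."""
    return sum((yi - a * math.exp(b * xi)) ** 2 for xi, yi in zip(x, y))

def marquardt_fit(x, y, a, b, lam=1e-3, max_iter=200, tol=1e-12):
    """Estimate (a, b) by a basic Levenberg-Marquardt iteration."""
    current = sse(x, y, a, b)
    for _ in range(max_iter):
        # Accumulate J'J (2x2) and J'r (2x1) from the partial derivatives.
        jaa = jab = jbb = ga = gb = 0.0
        for xi, yi in zip(x, y):
            e = math.exp(b * xi)
            da, db = e, a * xi * e       # df/da and df/db
            r = yi - a * e               # residual at the current estimates
            jaa += da * da
            jab += da * db
            jbb += db * db
            ga += da * r
            gb += db * r
        # Damped normal equations: (J'J + lam*diag(J'J)) step = J'r
        m11, m22 = jaa * (1.0 + lam), jbb * (1.0 + lam)
        det = m11 * m22 - jab * jab
        step_a = (m22 * ga - jab * gb) / det
        step_b = (m11 * gb - jab * ga) / det
        try:
            trial = sse(x, y, a + step_a, b + step_b)
        except OverflowError:
            trial = float("inf")         # treat an exploding step as a failure
        if trial < current:
            improvement = current - trial
            a, b, current = a + step_a, b + step_b, trial
            lam /= 10.0                  # good step: move toward Gauss-Newton
            if improvement < tol:
                break
        else:
            lam *= 10.0                  # bad step: move toward gradient descent
            if lam > 1e12:
                break
    return a, b

# Noise-free data generated from a = 2, b = 0.3, with rough starting values.
x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0 * math.exp(0.3 * xi) for xi in x]

a_hat, b_hat = marquardt_fit(x, y, 1.0, 0.1)
print(round(a_hat, 4), round(b_hat, 4))
```

Like any iterative nonlinear fit, the result can depend on the starting values, so reasonable initial estimates matter.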

Partial Least Squares

Partial Least Squares is designed to construct a statistical model relating multiple independent variables X to multiple dependent variables Y. The procedure is most helpful when there are many predictors and the primary goal of the analysis is prediction of the response variables. Unlike other regression procedures, estimates can be derived even in the case where the number of predictor variables outnumbers the observations. PLS is widely used by chemical engineers and chemometricians for spectrometric calibration.

General Linear Models

The GLM procedure is useful when the predictors include both quantitative and categorical factors. When fitting a regression model, it provides the ability to create surface and contour plots easily.

Life Data Regression

To describe the impact of external variables on failure times, regression models may be fit. Unfortunately, standard least squares techniques do not work well for two reasons: the data are often censored, and the failure time distribution is rarely Gaussian. For this reason, STATGRAPHICS provides a special procedure that will fit life data regression models with censoring, assuming either an exponential, extreme value, logistic, loglogistic, lognormal, normal or Weibull distribution.

Regression Analysis for Proportions

When the response variable is a proportion or a binary value (0 or 1), standard regression techniques must be modified. STATGRAPHICS provides two important procedures for this situation: Logistic Regression and Probit Analysis. Both methods yield a prediction equation that is constrained to lie between 0 and 1.
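
The constraint comes from the form of the prediction equations. The sketch below evaluates the standard logistic and probit curves for a single predictor; the coefficients are hypothetical values chosen for illustration, not estimates from any data set.

```python
import math

def logistic_prob(x, b0, b1):
    """Logistic model: p = 1 / (1 + exp(-(b0 + b1*x)))."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))

def probit_prob(x, b0, b1):
    """Probit model: p = Phi(b0 + b1*x), with Phi the standard normal CDF."""
    return 0.5 * (1.0 + math.erf((b0 + b1 * x) / math.sqrt(2.0)))

b0, b1 = -3.0, 0.8   # hypothetical coefficients, not fitted to data

for x in (0.0, 2.0, 4.0, 6.0, 8.0):
    print(x, round(logistic_prob(x, b0, b1), 4), round(probit_prob(x, b0, b1), 4))
```

Both curves are S-shaped and pass through 0.5 where b0 + b1*x equals zero; for the same coefficients the probit curve approaches 0 and 1 more quickly than the logistic curve.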

Regression Analysis for Counts

For response variables that are counts, STATGRAPHICS provides two procedures: a Poisson Regression and a Negative Binomial Regression. Each fits a loglinear model involving both quantitative and categorical predictors.
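
In a loglinear model the logarithm of the expected count \mu_i is a linear function of the predictors. The two procedures differ in the assumed variance; the negative binomial form shown below is one common parameterization with dispersion parameter \alpha, and the source does not say which parameterization STATGRAPHICS uses:

```latex
\log \mu_i = \beta_0 + \beta_1 x_{i1} + \dots + \beta_k x_{ik}

\text{Poisson: } \operatorname{Var}(Y_i) = \mu_i
\qquad
\text{Negative binomial: } \operatorname{Var}(Y_i) = \mu_i + \alpha \mu_i^2
```

The extra term lets the negative binomial model accommodate counts whose variance exceeds the mean.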
